SEO for LLMs in the AI era

People increasingly ask AI assistants for answers. Those responses are synthesized from web sources that model providers crawl, index, and score for reliability.

Short videos

Social and video build reach, but text pages that act as a single source of truth remain the most consistently crawlable, linkable, and citable.

Emerging conventions such as llms.txt aim to help AI systems locate your most authoritative, up‑to‑date content and understand your crawl and usage preferences. Treat llms.txt as an addition to, not a replacement for, robots.txt and sitemaps.
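The llms.txt proposal (llmstxt.org) describes a Markdown file served at `/llms.txt`. It contains an H1 with the site name, a short blockquote summary, and H2 sections that list links with optional notes. Below is a minimal Python sketch that renders such a file. The `Link` type, `render_llms_txt` function, and yurin.dev page URLs are hypothetical placeholders. The format is still a proposal, so check the current version before relying on any detail.

```python
from dataclasses import dataclass


@dataclass
class Link:
    # Hypothetical helper type: one entry in an llms.txt section.
    title: str
    url: str
    note: str = ""


def render_llms_txt(site: str, summary: str, sections: dict[str, list[Link]]) -> str:
    """Render an llms.txt body: H1 name, blockquote summary, H2 link sections."""
    lines = [f"# {site}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for link in links:
            # Each entry is a Markdown link, optionally followed by ": note".
            entry = f"- [{link.title}]({link.url})"
            if link.note:
                entry += f": {link.note}"
            lines.append(entry)
        lines.append("")
    # Drop trailing blank lines and end the file with exactly one newline.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    # Hypothetical pages; replace them with your own canonical URLs.
    sections = {
        "Pages": [
            Link("About", "https://yurin.dev/about", "Who I am and my technical stack"),
            Link("FAQ", "https://yurin.dev/faq"),
        ],
    }
    print(render_llms_txt("yurin.dev", "Personal site of a software developer.", sections))
```

Generating the file from the same list of canonical pages you already maintain helps keep it from drifting out of date.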

To be discoverable and quotable by LLMs:

- Publish canonical, up-to-date pages for FAQs, product/service specs, policies, About, and Locations; keep them readable and unambiguous.
- Add schema.org markup (Article, FAQPage, Product, LocalBusiness), clear H1–H3 headings, lists, and tables to improve machine readability.
- Maintain XML sitemaps with accurate lastmod values; expose visible “Updated on” timestamps or a simple changelog.
- Use consistent canonicals, logical internal links, and fast pages (Core Web Vitals) to enable efficient crawling and ranking.
- Manage crawler access and rate limits in robots.txt, and consider rules for model-specific user agents. Publish an llms.txt file that points to your canonical sources and states your update cadence, and keep it current as the ecosystem matures.
- Strengthen E‑E‑A‑T: author names and credentials, citations, organization details, and third‑party references.
- Track AI‑related bot activity and referrals; audit how your content is summarized in AI answers and iterate.
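Schema.org markup is commonly embedded as JSON-LD inside a `<script type="application/ld+json">` tag. Below is a minimal Python sketch that builds a FAQPage object from question/answer pairs and wraps it in that tag. The function names and sample questions are hypothetical. Validate real output with a tool such as Google's Rich Results Test before shipping.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script tag that goes into the page HTML."""
    # Escape "</" as "<\/" (still valid JSON) so text containing
    # "</script>" cannot close the tag early.
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'


if __name__ == "__main__":
    # Hypothetical FAQ entries; use the questions actually shown on your page.
    pairs = [("What stack do you use?", "Python and TypeScript.")]
    print(script_tag(faq_jsonld(pairs)))
```

Generating the markup from the same data that renders the visible FAQ keeps the two in sync. Structured data is expected to match what readers see on the page.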

Invest

AI‑enhanced search rewards sites that are structured, current, and trustworthy. Invest in technical hygiene and clear text now to become the source AI prefers to cite.

Test the results!

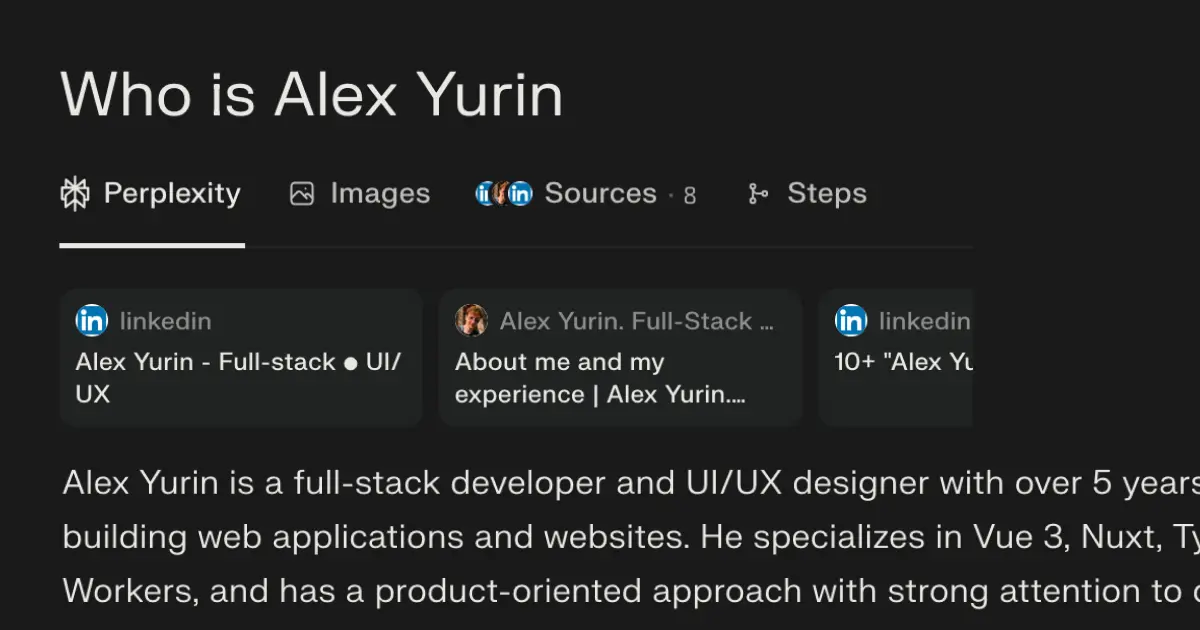

Ask the chatbot

First, check that the site is indexed: type site:yurin.dev into Google and make sure your pages appear in the results.

Then go to any popular chatbot that can search the internet and ask it about you!

What does this mean?

Currently, the models know me. If you ask about me by first and last name, they can tell you what I do, my technical stack, and other details.

In the future, the model will give the same answer to a broader request such as “Find me a reliable developer.”